Nathaniel Hanson
I am a Technical Staff Member in the Human Resilience Technology (HuRT) group at MIT Lincoln Laboratory. I research the intersection of robotics and remote sensing applied to disaster response, environmental monitoring, and unstructured environments. My PhD advisors were Taşkın Padır and Kris Dorsey. I am always excited to foster new collaborations. If you have any questions about my research, please feel free to contact me.
Email / GitHub / Google Scholar / LinkedIn / CV

Biography
Within the HADR group, I lead a portfolio of research focused on the intersection of robotics, disasters, and remote sensing. My PhD research in the Institute for Experiential Robotics focused on making hyperspectral imaging and near-infrared spectroscopy lighter and more cost-effective for use in robot-centric terrain and object understanding. I coined the term "material-informed robotics" to describe a novel reframing of robotics with material perception at its core. I am active in collaborative efforts with the MIT campus, including the Beaver Works Summer Institute, where I am the lead instructor for the Unmanned Aerial Vehicle Racing program, and the Learning Machines Training course with the MIT Media Lab. I have an MS in Computer Science from Boston University and a BS in Computer Engineering with a minor in Theology from the University of Notre Dame, where I worked in Jane Cleland-Huang's lab.

Research Directions
I'm interested in hyperspectral imaging, material identification, multi-modal sensing, remote sensing, and robotics. A good summary of my research philosophy and interests is provided in my dissertation defense.

Publications
Peer-reviewed conference and journal papers are listed below. Representative papers are highlighted.

A Soft, Open-Source Growing Robot for Urban Search and Rescue
Antonio Alvarez Valdivia, Ciera McFarland, Ankush Dhawan, Chad Council, Robert Reeve, Margaret Coad*, Nathaniel Hanson*
In Preparation, 2025
As a culmination of all our development with vine robots, we release a field-validated, open-source soft growing robot designed for unstructured terrain exploration, with a complete methodology including fabrication instructions, ROS2-based software, and deployment protocols. By providing open-source code, CAD files, and field data, we aim to advance reproducibility in soft robotics and enable real-world adoption for disaster-response applications.

An Improved Low Friction Body and Camera Mount for Soft Growing Robots
Antonio Alvarez Valdivia, Ciera McFarland, Ankush Dhawan, Chad Council, Robert Reeve, Margaret Coad, Nathaniel Hanson
In Preparation, 2025
We created a novel triangular roller camera mount for vine robots that reduces internal resistance during growth, developed through an iterative design process informed by failure analysis. Using a custom testbed to benchmark mount designs, we show that our approach achieves the lowest tail tension and most consistent performance, providing a practical pathway toward more reliable tip-mounted tools for soft growing robots.

On Steerability Factors for Growing Vine Robots
Ciera McFarland, Antonio Alvarez Valdivia, Nathaniel Hanson, Margaret Coad
Under Review, 2025
We investigate the factors that influence the steerability of vine robots, which extend by everting material from their tips. Through experiments on payload, length, pressure, diameter, fabrication method, and actuator-to-body pressure ratios, we find that steerability decreases with tip weight, increases with length, peaks at intermediate pressures, and depends strongly on fabrication and pressure ratios. These insights inform the design of field-ready vine robots for search and rescue.

RubbleSim: A Photorealistic Structure Collapse Simulator for Confined Space Mapping
Constantine Frost, Chad Council, Margaret Coad, Nathaniel Hanson
IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), 2025
code
Despite well-reported instances of robots being used in disaster response, there is scant published data on the internal composition of the void spaces within rubble piles. To overcome this access challenge, we present RubbleSim, an open-source, reconfigurable simulator for photorealistic void space exploration. The design of the simulation assets is directly informed by visits to numerous training rubble sites at differing levels of complexity.

Capacitive Sensor Design for Soft Robots and Smart Garments
Damla Leblebicioglu, Immanuel Ampomah Mensah, Joseph Allen, Ahilesh Vadivel, Nathaniel Hanson, Kristen L. Dorsey
IEEE International Conference on Solid State Sensors and Actuators (TRANSDUCERS), 2025
We design capacitive sensors for soft robots and smart garments that balance high displacement sensitivity with strong rejection of electrical disturbances. Through finite element modeling, fabrication, and wireless board integration, we demonstrate electrode configurations that reduce disturbance responses by up to 150× while maintaining practical sensitivity, advancing reproducible capacitive sensing for soft robotics and wearable systems.

Field Calibration of Hyperspectral Cameras for Autonomous Terrain Inference
Nathaniel Hanson, Benjamin Pyatski, Samuel Hibbard, Gary Lvov, Oscar De La Garza, Charles DiMarzio, Kristen Dorsey, Taşkın Padır
IEEE Robotics & Automation Letters, 2025
Towards the integration of hyperspectral imaging in field robotics, we present a novel system architecture for collecting and registering snapshot hyperspectral data from multiple cameras mounted to a mobile robot. A calibration algorithm is developed to generate the camera white reference from the signal of a point spectrometer without the need to periodically image a reflectance calibration target. Our approach enables the calibration of the system under varying illumination conditions. As a practical demonstration of the potential of hyperspectral imaging in robotics, we show the calculation of vegetative health indices and soil moisture content from data collected from a mobile off-road vehicle.

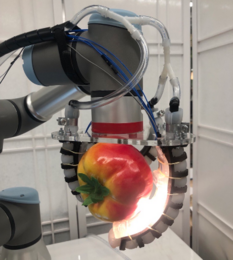

SCANS: A Soft Gripper with Curvature and Spectroscopy Sensors for In-Hand Material Differentiation
Nathaniel Hanson*, Austin Allison*, Charles DiMarzio, Taşkın Padır, Kristen Dorsey
IEEE Robotics & Automation Letters, 2025
We present the SCANS (Simultaneous Curvature and Near Infrared Spectroscopy) system, a first-of-its-kind sensor that combines advances in soft optical sensing and robot-centric spectroscopy for a high-throughput, multi-functional sensor. This work addresses limitations in soft sensing by leveraging light in two distinct ways, spectral reflectance and curvature-induced loss, to identify in-hand objects. We also show the gripper’s ability to distinguish objects during pre-grasp and contact stages of a grasping cycle.

Cuvis.Ai: An Open-Source, Low-Code Software Ecosystem for Hyperspectral Processing and Classification
Nathaniel Hanson, Philip Manke, Simon Birkholz, Maximilian Mühlbauer, Rene Heine, Arnd Brandes
IEEE Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), 2024
paper / code
cuvis.ai is a software toolkit designed to facilitate the development of artificial intelligence (AI) and machine learning applications for hyperspectral measurements. This toolkit enables the creation of a graph from a set of different preexisting supervised and unsupervised nodes. Furthermore, it provides data preprocessing and output postprocessing, thus offering a comprehensive package for the development of AI capabilities for hyperspectral images. This repository is aimed at companies, universities and private enthusiasts alike. Its objective is to provide a foundation for the development of cutting-edge hyperspectral AI applications.

Improving Vine Robot Teleoperation Via Gravity-Aligned Camera Reorientation
Ciera McFarland, Sarah Taher, Nathaniel Hanson*, Margaret Coad
Under Review, 2024
Soft, everting vine robots can carry a tip-mounted camera and steer in highly complex environments. However, as with many continuum robots, their bodies can twist and flip upside down as they follow a path. This can result in the camera feed tilting to angles that are challenging for a user to interpret, especially when relying on the camera feed alone to guide the robot along a desired path. We propose a new algorithm that reorients the tip camera view to always present it to the user with gravity down. Our method also reorients the mapping between the user’s teleoperation inputs and the robot’s motions to align with the camera view the user sees. We perform a human study to validate the controller design and observe reductions in operators’ cognitive load.

Field Insights for Portable Vine Robots in Urban Search and Rescue
Ciera McFarland*, Ankush Dhawan*, Riya Kumari, Chad Council, Margaret Coad†, Nathaniel Hanson†
IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), 2024
arxiv / video
State-of-the-art vine robots have been tested in archaeological and other field settings, but their translational capabilities to urban search and rescue (USAR) are not well understood. To this end, we present a set of experiments designed to test the limits of a vine robot system, the Soft Pathfinding Robotic Observation Unit (SPROUT), operating in an engineered collapsed structure. SPROUT can grow through tight apertures, around corners, and into void spaces. Our work highlights opportunities and challenges for soft roboticists interested in deploying similar systems in support of the USAR community.

Use-Inspired Mobile Robot to Improve Safety of Building Retrofit Workforce in Constrained Spaces
Smruti Suresh, Michael Angelo Carvajal, Nathaniel Hanson, Ethan Holand, Samuel Hibbard, Taşkın Padır
IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), 2024
arxiv
The inspection of confined critical infrastructure such as attics or crawlspaces is challenging for human operators due to insufficient task space, limited visibility, and the presence of hazardous materials. This paper introduces a prototype of PARIS (Precision Application Robot for Inaccessible Spaces): a use-inspired teleoperated mobile robot manipulator system that was conceived, developed, and tested for, and selected as a Phase I winner of, the U.S. Department of Energy’s E-ROBOT Prize.

Forest Biomass Mapping with Terrestrial Hyperspectral Imaging for Wildfire Risk Monitoring
Nathaniel Hanson*, Sarvesh Prajapati*, James Tukpah, Yash Mewada, Taşkın Padır
IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), 2024
arxiv
With the rise in wildfires over the past decade, this paper introduces Hyper-Drive3D, a novel system combining snapshot hyperspectral imaging and LiDAR on an Unmanned Ground Vehicle (UGV) to identify high-risk forest fire areas. By analyzing vegetation and moisture spectral signatures, the system is a step towards proactive, automated forest fire management.

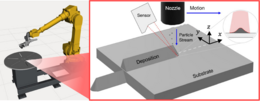

Scott E Julien, Nathaniel Hanson, Joseph Lynch, Samuel Boese, Kirstyn Roberts, Taşkın Padır, Ozan C Ozdemir, Sinan Müftü
Journal of Thermal Spray Technology, 2024
paper
Cold spray is a material deposition technology with a high deposition rate and attractive material properties that is of great interest for additive manufacturing (AM). Successfully cold spraying free-form parts that are close to their intended shape, however, requires knowing the fundamental shape of the sprayed track, so that a spray path can be planned that builds up a part from a progressively overlaid sequence of tracks. The present study implements a novel in situ track shape measurement technique using a custom-built nozzle-tracking laser profilometry system.

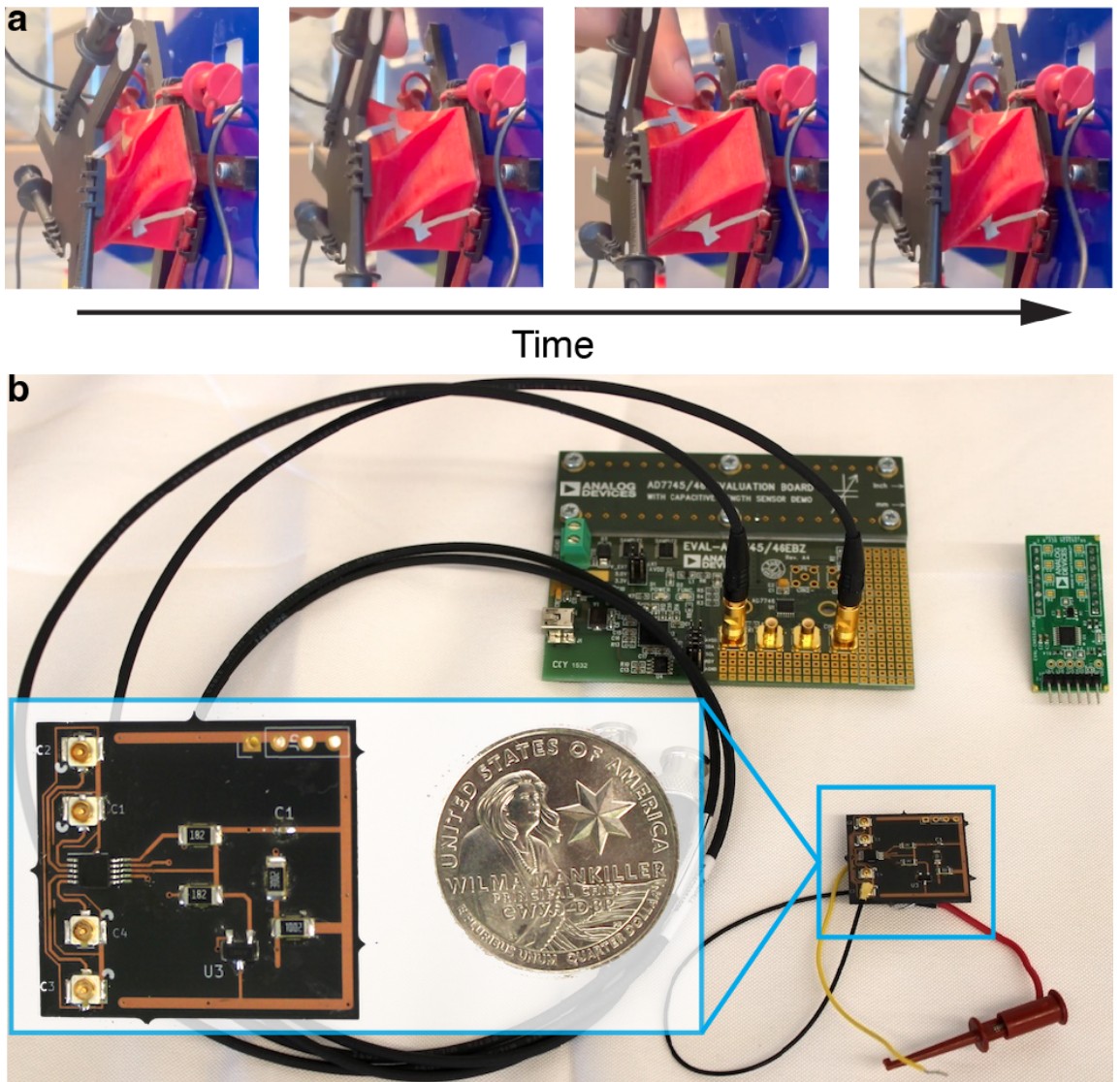

Controlling the Fold: Proprioceptive Feedback in a Soft Origami Robot
Nathaniel Hanson*, Immanuel Ampomah Mensah*, Sonia F. Roberts*, Jessica Healey, Celina Wu, Kristen L. Dorsey
Frontiers in Robotics and AI, 2024
paper / website
We demonstrate proprioceptive feedback control of a one degree of freedom soft, pneumatically actuated origami robot and an assembly of two robots into a two degree of freedom system. Pneumatic actuation, provided by negative fluidic pressure, causes the robot to contract. Capacitive sensors patterned onto the robot provide position estimation and serve as input to a feedback controller. This work contributes optimized capacitive electrode design and the demonstration of closed-loop feedback position control without visual tracking as an input. This approach to capacitance sensing and modeling constitutes a major step towards proprioceptive state estimation and feedback control in soft origami robotics.

PROSPECT: Precision Robot Spectroscopy Exploration and Characterization Tool
Nathaniel Hanson*, Gary Lvov*, Vedant Rautela, Samuel Hibbard, Ethan Holand, Charles DiMarzio, Taşkın Padır
IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024
arxiv / website
Near Infrared (NIR) spectroscopy is vital in industrial quality control for assessing item purity and material quality. We introduce a novel sensorized end effector and acquisition method for capturing spectral signatures and aligning them with a 3D point cloud. Our approach involves a 3D scan of the object, dividing it into planned viewpoints. Robot motion plans ensure optimal spectral signal quality through controlled distance and surface orientation. The system continuously gathers surface reflectance values, creating a four-dimensional model of the object. We demonstrate this system’s effectiveness in profiling complex geometries, showing improved signal consistency compared to naive methods.

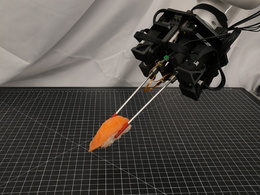

HASHI: Highly Adaptable Seafood Handling Instrument for Manipulation in Industrial Settings
Austin Allison*, Nathaniel Hanson*, Sebastian Wicke, Taşkın Padır
International Conference on Robotics and Automation (ICRA), 2024
arxiv / code / website
We propose a novel robot end effector, called HASHI, that employs chopstick-like appendages for precise and dexterous manipulation. This gripper is capable of in-hand manipulation by rotating its two constituent sticks relative to each other and offers control of objects in all three axes of rotation by imitating human use of chopsticks. HASHI delicately positions and orients food through embedded 6-axis force-torque sensors. We derive and validate the kinematic model for HASHI, as well as demonstrate grip force and torque readings from the sensorization of each chopstick.

Battery-Swapping Multi-Agent System for Sustained Operation of Large Planetary Fleets
Ethan Holand, Jarrod Homer, Alex Storrer, Musheeera Khandeker, Ethan F. Muhlon, Maulik Patel, Ben-oni Vainqueur, David Antaki, Naomi Cooke, Chloe Wilson, Bahram Shafai, Nathaniel Hanson, Taşkın Padır
IEEE Aerospace Conference, 2024
arxiv / code / website
This work shares an open-source platform developed to demonstrate battery swapping on unknown field terrain. We detail our design methodologies utilized for increasing system reliability, with a focus on optimization, robust mechanical design, and verification. Optimization of the system is discussed, including the design of passive guide rails through simulation-based optimization methods which increase the valid docking configuration space by 258%. The full system was evaluated during integrated testing, where an average servicing time of 98 seconds was achieved on surfaces with a gradient up to 10 degrees. We conclude by briefly proposing flight considerations for advancing the system toward a space-ready design.

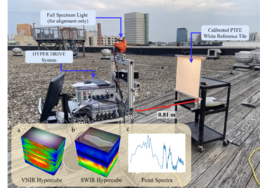

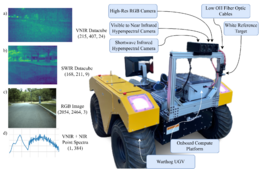

Hyper-Drive: Visible-Short Wave Infrared Hyperspectral Imaging Datasets for Robots in Unstructured Environments
Nathaniel Hanson, Benjamin Pyatski, Samuel Hibbard, Charles DiMarzio, Taşkın Padır
IEEE Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), 2023
arxiv / data / website
We introduce a first-of-its-kind system architecture with snapshot hyperspectral cameras and point spectrometers to efficiently generate composite datacubes from a moving robot base. Our system collects and registers datacubes spanning the visible to shortwave infrared (660-1700 nm) spectrum while simultaneously capturing the ambient solar spectrum reflected off a white reference tile. We collect and disseminate a large dataset of more than 500 labeled datacubes from on-road and off-road terrain compliant with the ATLAS ontology to further the integration of hyperspectral imaging (HSI).

A Vision for Cleaner Rivers: Harnessing Snapshot Hyperspectral Imaging to Detect Macro-Plastic Litter
Nathaniel Hanson*, Ahmet Demirkaya*, Deniz Erdoğmuş, Aron Stubbins, Taşkın Padır, Tales Imbiriba
IEEE Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), 2023
arxiv / code
To address the problem of mismanaged plastic waste, we analyze the feasibility of macro-plastic litter detection using computational imaging approaches in river-like scenarios. We enable near-real-time tracking of partially submerged plastics by using snapshot Visible-Shortwave Infrared hyperspectral imaging. Our experiments indicate that imaging strategies coupled with machine learning classification approaches can lead to high detection accuracy even in challenging scenarios, especially when leveraging hyperspectral data and nonlinear classifiers.

Mobile MoCap: Retroreflector Localization On-The-Go
Gary Lvov, Mark Zolotas, Nathaniel Hanson, Austin Allison, Xavier Hubbard, Michael Carvajal, Taşkın Padır
IEEE International Conference on Automation Science and Engineering (CASE), 2023
arxiv / code
We present a retroreflector feature detector that performs 6-DoF (six degrees-of-freedom) tracking and operates with minimal camera exposure times to reduce motion blur. To evaluate the proposed localization technique while in motion, we mount our Mobile MoCap system, as well as an RGB camera to benchmark against fiducial markers, onto a precision-controlled linear rail and servo. We evaluate the two systems at varying distances, marker viewing angles, and relative velocities. Across all experimental conditions, our stereo-based Mobile MoCap system obtains higher position and orientation accuracy than the fiducial approach.

SLURP! Spectroscopy of Liquids Using Robot Pre-Touch Sensing
Nathaniel Hanson*, Wesley Lewis*, Kavya Puthuveetil*, Donelle Furline, Akhil Padmanabha, Taşkın Padır, Zackory Erickson
International Conference on Robotics and Automation (ICRA), 2023
arxiv / code / website
We present a state-of-the-art sensing technique for robots to perceive what liquid is inside of an unknown container. We do so by integrating Visible to Near Infrared (VNIR) reflectance spectroscopy into a robot’s end effector. We introduce a hierarchical model for inferring the material classes of both containers and internal contents given spectral measurements from two integrated spectrometers.

VAST: Visual and Spectral Terrain Classification in Unstructured Multi-Class Environments
Nathaniel Hanson, Michael Shaham, Deniz Erdoğmuş, Taşkın Padır
IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022
paper / data / code
To provide mobile robots with the capability to identify the terrain being traversed and avoid undesirable surface types, we propose a multimodal sensor suite capable of classifying different terrains. We capture high resolution macro images of surface texture, spectral reflectance curves, and localization data from a 9 degrees of freedom (DOF) inertial measurement unit (IMU) on 11 different terrains at different times of day. Using this dataset, we train individual neural networks on each of the modalities, and then combine their outputs in a fusion network.

Occluded Object Detection and Exposure in Cluttered Environments with Automated Hyperspectral Anomaly Detection
Nathaniel Hanson, Gary Lvov, Taşkın Padır
Frontiers in Robotics and AI, 2022
paper / code
Our approach proposes a new automated method to perform hyperspectral anomaly detection in cluttered workspaces with the goal of improving robot manipulation. We first assume the dominance of a single material class, and coarsely identify the dominant, non-anomalous class. Our work advances robot perception for cluttered environments by incorporating multi-modal anomaly detection aided by hyperspectral sensing into detecting fractional object presence without need for laboriously curated labels.

Hyperbot-A Benchmarking Testbed For Acquisition Of Robot-Centric Hyperspectral Scene And In-Hand Object Data
Nathaniel Hanson, Tarik Kelestemur, Joseph Berman, Dominik Ritzenhoff, Taşkın Padır
IEEE Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), 2022
paper / code
We designed a benchmarking testbed to enable a robot manipulator to perceive spectral and spatial characteristics of scene items. Our design includes the use of a push broom Visible to Near Infrared (VNIR) hyperspectral camera, co-aligned with a depth camera. This system enables the robot to process and segment spectral characteristics of items in a larger spatial scene.

In-Hand Object Recognition with Innervated Fiber Optic Spectroscopy for Soft Grippers
Nathaniel Hanson, Hillel Hochsztein, Akshay Vaidya, Joel Willick, Kristen Dorsey, Taşkın Padır
IEEE International Conference on Soft Robotics (RoboSoft), 2022
paper
We present a novel modular sensing platform integrated into a hybrid-manufactured soft robot gripper to collect and process high-fidelity spectral information. The custom design of the gripper is realized using 3D printing and casting. We embed full-spectrum light sources paired with lensed fiber optic cables within an optically clear gel to collect multi-point spectral reflectivity curves in the Visible to Near Infrared (VNIR) segment of the electromagnetic spectrum.

Academic Organizing
Efforts in which I participated as one of the core organizers.

2025 IEEE International Symposium on Safety, Security, and Rescue Robotics
website / Publicity Chair
The safety, security, and rescue robotics community focuses on the ethical use of robots for public safety and security applications, such as law enforcement, anti-terrorism, nuclear decommissioning, and inspection of critical infrastructure, all phases of emergency management (prevention, preparedness, response, and recovery), and humanitarian assistance and disaster relief.

HARDER Workshop @ IEEE RoboSoft
website / Lead Organizer
This workshop, inspired by the RoboSoft 2024 keynote, will focus on leveraging soft robotics for real-world challenges in humanitarian assistance, disaster relief, environmental monitoring, and exploration (HARDER). The HARDER workshop will pair academic researchers with field practitioners to explore exciting applications and unmet needs in purposeful, interdisciplinary conversations.

2024 IEEE International Symposium on Safety, Security, and Rescue Robotics
website / Publicity Chair, Associate Editor
The safety, security, and rescue robotics community focuses on the ethical use of robots for public safety and security applications, such as law enforcement, anti-terrorism, nuclear decommissioning, and inspection of critical infrastructure, all phases of emergency management (prevention, preparedness, response, and recovery), and humanitarian assistance and disaster relief.

Other Projects
These include coursework, side projects, and unpublished research work.

Hold 'em and Fold 'em: Towards Human-scale, Feedback-Controlled Soft Origami Robots
Immanuel Ampomah Mensah, Jessica Healey, Celina Wu, Andrea Lacunza, Nathaniel Hanson, Kristen L. Dorsey
Competition 2024-01-31
arxiv / website
In this work, we demonstrate proprioceptive (embodied) feedback control of a soft, pneumatically actuated origami robot, and actuation of these origami robots under a person’s weight in an open-loop configuration. This work was a runner-up in the open category of the 2023 Soft Robotics Toolkit Competition.

Material Informed Robotics – Spectral Perception for Object Identification and Parameter Inference
Nathaniel Hanson*
Dissertation 2023-12-16
paper
Traditional robot perception has focused on recognizing an object’s semantic purpose as a proxy for understanding ideal interaction strategies. However, semantic recognition does not include an implicit understanding of the material composition of these objects. Material recognition aids in understanding properties like weight, friction, and deformability. In this dissertation, the identification of material makeup in objects and scenes composed of heterogeneous materials is achieved through the introduction of near infrared (NIR) spectroscopy into robotics.

Pregrasp Object Material Classification by a Novel Gripper Design with Integrated Spectroscopy
Nathaniel Hanson, Tarik Kelestemur, Deniz Erdoğmuş, Taşkın Padır
Preprint 2021-09-15
arxiv
We introduce a novel design for a two-fingered gripper with an integrated NIR spectrometer and endoscopic camera to collect VNIR spectral readings and macro surface images from grasped items. We also develop a method based on a nonlinear Support Vector Machine (SVM) to achieve material inference between visually similar items (a smorgasbord of real and artificial fruits) and continually update class estimates with a discrete Bayes filter.

Design and source code from Jon Barron's website